TIBCO Cloud Integration, formerly known as TIBCO Scribe Online, is a cloud-based data integration tool that helps businesses sync data between two existing applications. It offers a wide variety of connectors, allowing it to extract data from HubSpot, Google Analytics, Salesforce, Amazon Redshift, Amazon S3, Dropbox, databases, and many other sources.

While TIBCO Cloud Integration offers code-free customization, the fusionSpan team has been leveraging code to get the most out of it. Successful projects include creating pipelines using API calls, sending pipeline notifications to Slack, automating FTP uploads, and writing complex SQL processes.

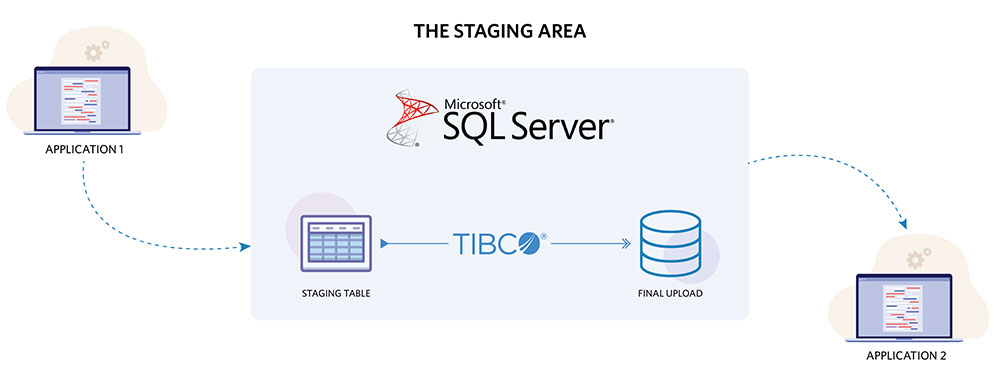

In one of our projects, which sends data from one system to another, we leveraged SQL alongside TIBCO Cloud Integration to ensure quick and efficient data transfer. The pipeline we built moves data through multiple stages of transformation before it reaches its final destination.

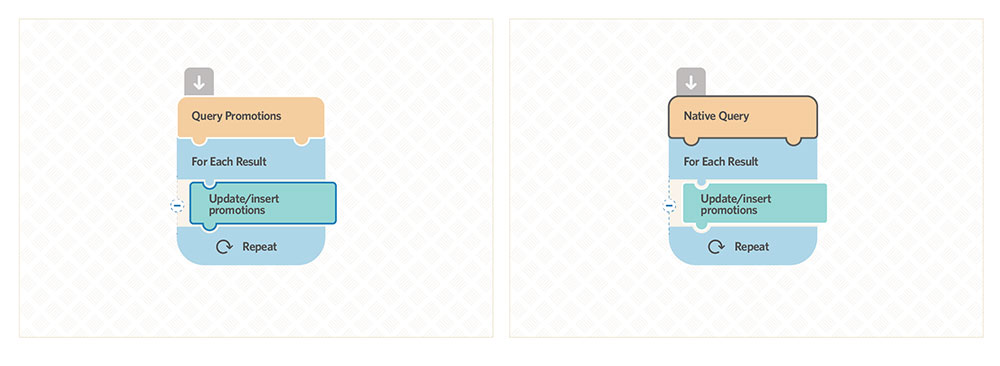
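To make the idea of staged transformation concrete, here is a minimal sketch in Python. It is not the pipeline from our project: it uses an in-memory SQLite database as a stand-in, and the table names (`raw_contacts`, `staged_contacts`, `final_contacts`) and the function `run_pipeline` are hypothetical names chosen for illustration. It only shows the general pattern of landing raw data first and then reshaping it with SQL one stage at a time.

```python
import sqlite3


def run_pipeline(rows):
    """Load raw rows, then pass them through two SQL transformation stages.

    All table names here are hypothetical; SQLite stands in for whatever
    database the real pipeline would use.
    """
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()

    # Stage 0: land the raw data exactly as it was received.
    cur.execute("CREATE TABLE raw_contacts (email TEXT, full_name TEXT)")
    cur.executemany("INSERT INTO raw_contacts VALUES (?, ?)", rows)

    # Stage 1: normalize values and drop rows that have no usable email.
    cur.execute(
        """
        CREATE TABLE staged_contacts AS
        SELECT LOWER(TRIM(email)) AS email, TRIM(full_name) AS full_name
        FROM raw_contacts
        WHERE email IS NOT NULL AND TRIM(email) <> ''
        """
    )

    # Stage 2: deduplicate on email before the data reaches its destination.
    cur.execute(
        """
        CREATE TABLE final_contacts AS
        SELECT email, MIN(full_name) AS full_name
        FROM staged_contacts
        GROUP BY email
        """
    )

    result = cur.execute(
        "SELECT email, full_name FROM final_contacts ORDER BY email"
    ).fetchall()
    conn.close()
    return result
```

Keeping each stage in its own table makes it easier to inspect intermediate results when a record does not arrive in the shape you expect.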

So you might be wondering, how are these pipelines built with TIBCO Cloud Integration?